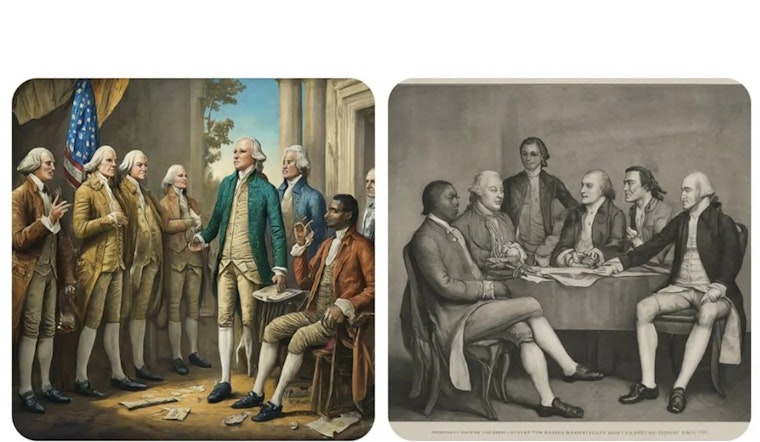

Google has halted its generative AI system Gemini's ability to create images of people following an eruption of controversy over its depictions of historical figures. The images at issue included what were termed "historical inaccuracies," such as racially diverse World War II-era Nazi soldiers and various non-white depictions of the American Founding Fathers. The tech giant conceded there were issues with the AI's creations, as reported by Variety. Critics called out the AI for its anachronistic and culturally insensitive outputs, questioning the algorithm's interpretation of history.

The Gemini chatbot, a rebranded version of Google's Bard, stirred heated reactions from users on X after it went live and produced a slew of racially diverse images, including Black Vikings and a woman depicted as a Catholic pope. Users such as Elon Musk took the opportunity to critique the chatbot for inserting "woke" depictions into historical settings. Musk, in turn, promoted the upcoming release of his own chatbot, which he claims will avoid such "PC funny business," as detailed in a report by SFist. The uproar follows earlier accusations of racial bias against Google, notably in 2015, when its Photos app tagged a picture of two Black individuals as "gorillas." Google ultimately addressed that problem by pulling the primate identification feature from the app.

"We're already working to address recent issues with Gemini's image generation feature. While we do this, we're going to pause the image generation of people and will re-release an improved version soon," Google stated on Feb. 22 as the company began efforts to rectify the AI's problematic outputs, according to Variety. The decision followed criticism that Google had allowed the AI's emphasis on showcasing diversity to distort historical realities.

Meanwhile, pundits have seized upon the controversy to lambaste the corporate culture at Google. Computer scientist and investor Paul Graham called the Gemini-generated images "a self-portrait of Google's bureaucratic corporate culture." Right-wing commentator Ashley St. Clair linked the debacle to wider patterns in media, writing in a post on X, as reported by Variety, "What's happening with Google's woke Gemini AI has been happening for years in Media and Hollywood, and everybody who called it out was called racist and shunned from society." Amid the backlash, Google's search feature has also raised eyebrows over the images it returns when queried about different kinds of couples, underscoring the challenges tech companies face in balancing representation and historical fidelity.

As users and commentators await Google's promised improvements to Gemini, concern is growing over AI's role in historical representation and the broader effect of machine-generated interpretations on our collective understanding of the past. With alternative AI chatbots on the horizon, including xAI's Grok, which Musk is heralding, it remains to be seen how new entrants will tackle these complex issues and what impact their solutions will have on the debate.